FAKE CROW'S BLOG

User Testing for Mobile Apps: Case Study

Most luminaries in the tech world will tell you that thorough testing can mean the difference between runaway success and abject failure. User testing lets brands step back from their product and see it for what it really is, not just what they want it to be. Most startups, however, do not have the time or resources to carry it out on their own. That’s why we were thrilled when the founder and CEO of a soon-to-launch social media app came to us in the very early stages of their product and asked us to run usability testing for them. Here’s how we conducted our test, and the conclusions we were able to draw from it.

Getting Started

Before beginning any user test, it is critical to formulate a plan. What do your collaborators want to get out of the test? What are the goals of the test itself? Remember that when building out your objectives, the highest priority should be improving the user’s experience, not catering to the app’s builders.

In our case, the stakeholders wanted us to test three things:

1. The appeal of their app’s core concept, and its clarity to the user

2. The overall usability of their app

3. How the app resonated with their target audience

We’ll come back to that last point in the next section. Once we had a sense of what our partner wanted from the test, we put together a list of goals based on their asks. These goals reflect our specific research objectives: exactly what we needed to learn in order to improve the app.

– Test the app’s usability, focusing on specific functions

– Gather micro-reactions and quotes from the testers

– Reveal larger experience problems, pain points, and friction areas

– Gauge effectiveness and clarity of product’s message

– Gauge users’ interest in returning to the app

– Develop a list of recommendations for UX/UI improvements

Screening to Match the Ideal Target User

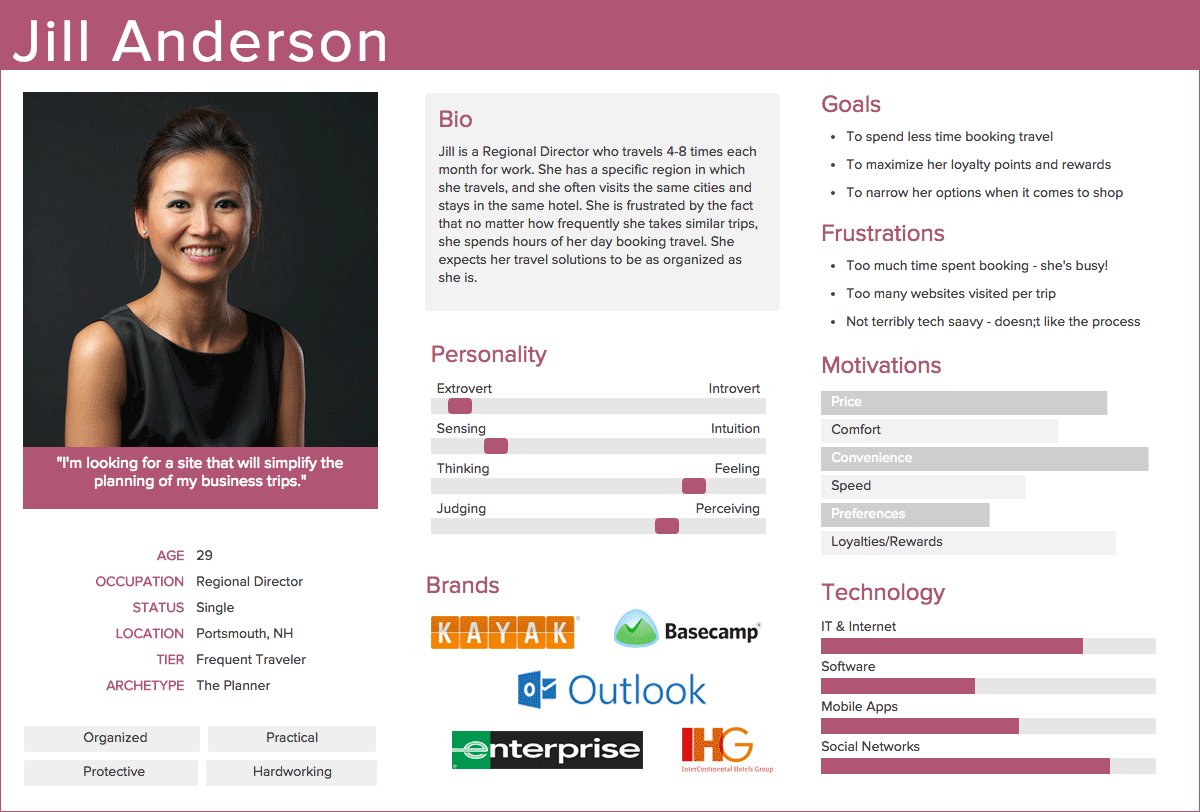

Example user personas embody our ideal audience groups.

Making decisions based on a user test carried out on the wrong target audience is like judging an elephant on how good it is at climbing trees. Your product or service might have an incredible value proposition, but it means nothing if you don’t reach the right people. With that in mind, the logical next step in user testing is identifying and learning about your target audience.

We had our client use our startup toolkit Xtensio to create Personas for their desired user types. This gave them an easy way to build a fully fleshed-out user profile, and gave us the information we needed to begin screening applicants.

Conducting both qualitative and quantitative research on your market is crucial in realizing key pain points of your target audience. Need help discovering these insights? Let’s chat.

We designed a nine-question survey based on the company’s desired target market and sent it to potentially interested users. Five of the questions were demographic in nature, and the other four asked whether respondents’ habits, interests, and app usage aligned with our partner’s goals. To reach a diverse and strong group of applicants, we distributed the survey through personal networks, university groups, job forums, and even Craigslist.

The reaction was impressive: we received 68 responses in 24 hours. From those 68, we reached out to the 30 respondents who best fit our criteria and confirmed the first 20 who replied. It is worth mentioning that usability testing is often said to need as few as five testers to surface most of an app’s usability problems and justify meaningful changes. We went with a larger group so that we could gauge market fit and resonance with the target audience in addition to conducting traditional user testing.
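The five-tester rule of thumb is usually traced to a model published by Jakob Nielsen and Tom Landauer, in which the share of usability problems found by n testers is 1 - (1 - L)^n, where L is the chance that a single tester runs into any given problem. The sketch below assumes their often-quoted average of L = 0.31. That value is not something we measured in this test, and the function name is ours, chosen for illustration.

```python
def share_of_problems_found(testers: int, hit_rate: float = 0.31) -> float:
    """Estimate the share of usability problems uncovered by `testers` people.

    Assumes each tester independently hits any given problem with
    probability `hit_rate`. The default of 0.31 is a commonly quoted
    average, used here as an assumption rather than a measured value.
    """
    if testers < 0:
        raise ValueError("testers must be non-negative")
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return 1.0 - (1.0 - hit_rate) ** testers


if __name__ == "__main__":
    # Compare a few panel sizes, including the 20 testers we used.
    for n in (1, 3, 5, 10, 20):
        print(f"{n:>2} testers -> {share_of_problems_found(n):.0%} of problems")
```

Under that assumption, five testers uncover roughly 84 percent of the problems, and each additional tester adds less than the one before. That is why a panel of 20 only makes sense when, as in our case, you are also measuring something other than usability.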

The Testing Process

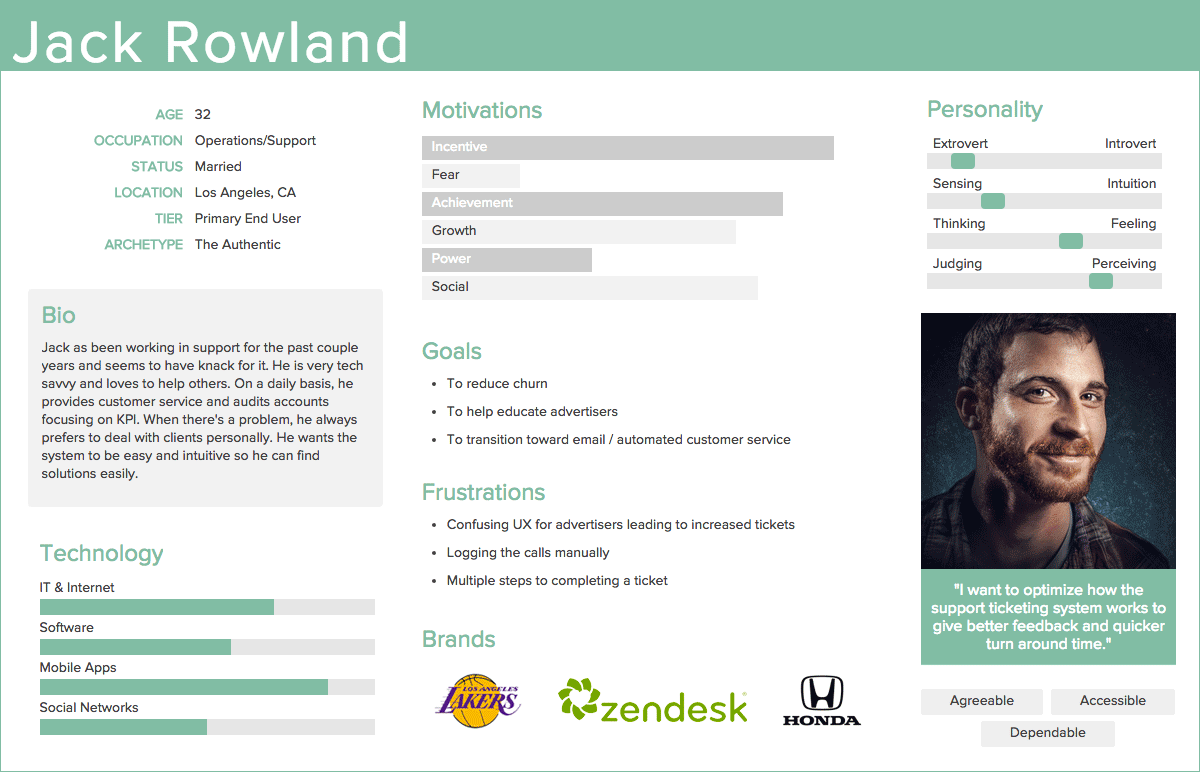

Usability testing environment: one webcam facing the participant, one document camera facing the phone screen.

In mobile app testing, the smallest reactions, like a raised eyebrow or a hesitating finger, can speak volumes about the user’s experience. Users should be prepared to narrate their entire experience as they go, and you need systems in place to record both their use of the app and their reactions. It is also crucial to provide some structure without micromanaging. That way you’re far more likely to get results that are useful, honest, and unbiased. Asking too many questions can quickly lead participants to conclusions they would not have drawn otherwise.

We had a moderator and a note taker in the office with our participants, and also used two cameras to record* them. One captured the phone’s screen and their finger motions, and the other was focused on their face to record any interesting reactions or statements.

The moderator stuck to an agenda of suggestions, helpful enough to guide users through the app but broad enough to leave most of the discovery process up to them without coloring their impressions. The agenda was separated into four distinct phases.

First, we gave users a disclaimer about the app, informed them that Fake Crow was not affiliated with the app’s developers, and gave them a tagline for the product. Then, after they had spent some time using the app unguided and narrating their responses, we asked them to carry out a few specific tasks within the app. When testing was complete, we asked the users five questions designed to further probe their understanding of the app and its concept, along with their frustrations, desires, likes, and dislikes. Finally, we added a step not normally carried out in user testing: one week after the test, we followed up with our users to gauge what they remembered about the product and their impressions of it. All that was left was compiling the results.

Organizing and Analyzing the Results

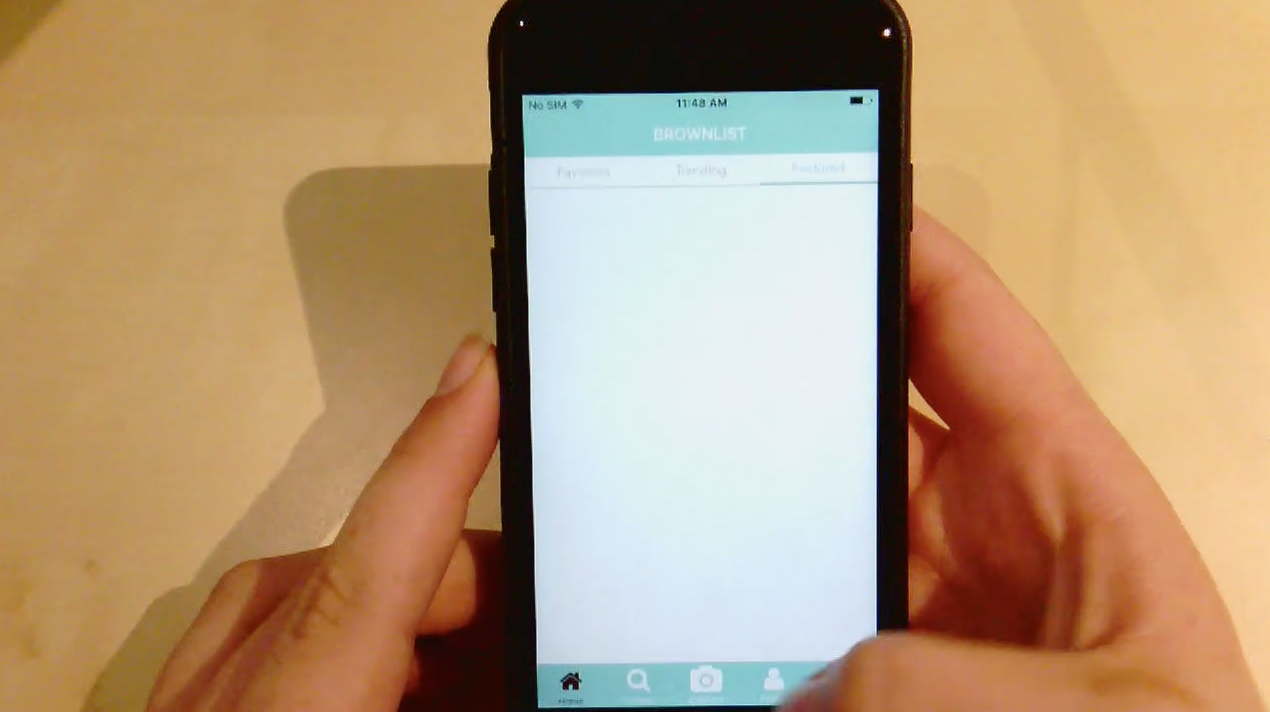

Screenshots from the two angles of video recorded during testing.

Once you have all your tester data, the real work begins. This is when you have to sift through all your notes and videos to identify common reactions, frustrations, and pain points. Once you have identified some trends, you can begin sorting everything into buckets to get meaningful results. These results, the actual hard data, are what any and all recommendations for improvements should be based on.

By compiling all of our notes and videos, we created a standardized set of the reactions we saw recurring across sessions. We grouped these reactions into six distinct sets of pain points: one general, four associated with specific app features, and one formed from our follow-up questions. Some truly significant results came out of this, like all 20 of our testers enjoying a particular feature while completely missing its intended function, or all but two finding one of the app’s core features annoying and intrusive.
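Sorting observations into buckets can be as simple as tagging each note with a pain point and counting how many distinct testers raised it. The sketch below is one way to do that, not a record of our actual tooling: the tester IDs, bucket names, and the `rank_buckets` helper are all hypothetical, and the sample notes are invented for illustration.

```python
from collections import defaultdict


def rank_buckets(notes, total_testers):
    """Rank pain-point buckets by how many distinct testers raised them.

    `notes` is an iterable of (tester_id, bucket) pairs. Returns a list of
    (bucket, tester_count, share_of_testers) tuples, most common first.
    """
    testers_by_bucket = defaultdict(set)
    for tester_id, bucket in notes:
        # A set ignores repeat mentions from the same tester.
        testers_by_bucket[bucket].add(tester_id)

    ranked = [
        (bucket, len(testers), len(testers) / total_testers)
        for bucket, testers in testers_by_bucket.items()
    ]
    # Most widely shared first; ties broken alphabetically for stable output.
    ranked.sort(key=lambda row: (-row[1], row[0]))
    return ranked


# Hypothetical notes for three testers; none of this is data from our test.
SAMPLE_NOTES = [
    ("t01", "onboarding unclear"),
    ("t01", "onboarding unclear"),  # repeated complaint, counted once
    ("t02", "onboarding unclear"),
    ("t02", "notifications intrusive"),
    ("t03", "notifications intrusive"),
    ("t03", "onboarding unclear"),
]

if __name__ == "__main__":
    for bucket, count, share in rank_buckets(SAMPLE_NOTES, total_testers=3):
        print(f"{bucket}: {count} of 3 testers ({share:.0%})")
```

Counting testers rather than mentions keeps one vocal participant from inflating a bucket, which matters when a finding like "all but two" is going to drive a recommendation.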

We then compiled our results into an easily digestible report for the app company to refer back to. The report was structured into seven sections:

– Executive summary

– Methods

– Findings

– Raw data

– Conclusions

– Recommendations

– Quotes

Recommending Fixes

Once all the results from testing are in, it’s time to figure out how to improve the app based on user feedback. This is possibly the most important thing to remember about user testing in general: all recommendations and improvements should be based on real reactions and results from the test, not on the personal opinions of the moderators or what the developers want to see.

When our test users were confused or frustrated by features, or when they had specific complaints about particular functions, we drew on our expertise in UX design and strategy to recommend fixes. Each recommendation was meant to effect large improvements through small changes, and we especially highlighted things that needed immediate attention, such as obvious bugs or features that were generally disliked. We provided high-level ideas for UX optimization, always building on what was already present to avoid a complete overhaul.

We complemented our recommendations with direct quotes from our users illustrating their impressions of the application. These gave the stakeholders a personal perspective and provided a valuable resource for their app’s development. We also cataloged known bugs encountered during user testing.

So what does it all mean?

We drew a number of meaningful conclusions from our mobile app testing sessions. The test itself was extremely revealing, showing us many areas of the app that could be improved and yielding plenty of good suggestions. Testing to reaffirm or contradict your ideas is make-or-break in the startup world, and the best strategy is often to build out the bare-bones features of your MVP, test, iterate, and repeat. It is doubtful that a developer carrying out their own user testing would have seen similar results.

The importance of having a third party carry out the user testing was apparent through the entire test process. Our independence from the stakeholders allowed us to obtain real data, unfettered by biased creators or users hesitant to react honestly, while also saving them a ton of work. In the real world, users are brutally honest and genuine, which can be difficult to replicate when an app’s creators are in the room. Additionally, founders and developers can sometimes dive so deep into their own work that it becomes difficult to identify issues that seem glaringly obvious to an outside individual.

Users need to be front and center throughout the entire process. Their satisfaction is essential to the success of an application: happy users mean successful apps, plain and simple. To keep users happy, biases need to be abandoned, and brutal honesty on all sides is a necessity. Investing in the optimization of your UX/UI can lead to transformative results.

Given the time and resources usability testing demands, it is often seen as a luxury. That’s why we run our usability tests as efficiently as possible, with the aim of confirming or refuting the assumptions the stakeholders are making. At the end of the day, we’re here to help entrepreneurs course-correct as swiftly as possible, without any wasted effort.

*When recording testers, it is best practice to make them aware of it. Have them sign a simple release form to cover your bases.